If we had a more progressive personalized difficulty (linear, like this /, and not like this _/) for all computing nodes, low-powered computers would have a better chance of writing blocks.

We must have a curve like the one above. If we had a progressive one, so that the personalized difficulty mirrors your machine’s CPU power, then by suddenly changing your hardware you would suddenly be able to compute a block very quickly.

A group could exploit this possibility to make a real mess. If we use a common difficulty, at least it reduces the number of potential attackers to the people with high computation power. And as soon as they compute a block, they get excluded from the computation for several turns, giving less powerful computers a chance.

I don’t mean removing common difficulty.

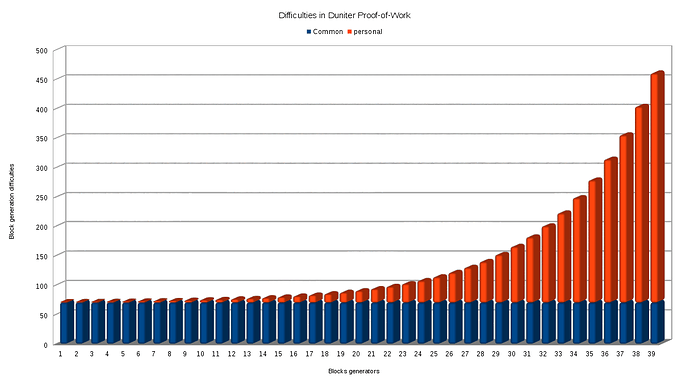

I mean a common base plus an exponential personal difficulty turning circularly:

Difficulty = common difficulty + personal difficulty

Like in this graph:

OK then this depends on the values chosen for the proof-of-work difficulty formula.

More precisely, we have to pay particular attention to the percentRot parameter. If its value is > 1, we get a curve like mine. But if its value is in [0;1], we can obtain a curve like yours.

Or we could even change the formula, but this would be a protocol fork/evolution.

N.B.: I designed this formula to be rather punitive and exclusive for the people who computed the last blocks, so that we do get a rotation. If we make it smoother, we run the risk of having a wider range of recent issuers able to compute a block early again.

Ok, now I understand.

The testnet currency uses percentRot = 66%.

So, does it mean 33% of recent issuers will have a huge generation difficulty?

If we reduce the percentRot parameter, the curve will be more linear, like the one on my graph.

Exactly!

You are probably right.

If we set the percentRot parameter to 0%, there will be a wider range of progressive difficulties, which will decrease as new blocks are added to the blockchain.

But for the last issuer, the difficulty must go from his previous difficulty to the maximum one.

So, I don’t think we take that risk:

That’s not a problem if the last issuer gets the maximum difficulty, and not in a circular way.

With this rule, we could maybe reduce the common difficulty for small-CPU issuers.

The common difficulty can’t be removed because it regulates the blockchain writing speed, keeping it at roughly one block every five minutes.

We could have small-CPU issuers mainly staying on the left of the graph and high-CPU issuers staying on the right.

I don’t understand why I’ve said that, it is completely wrong! The formula is:

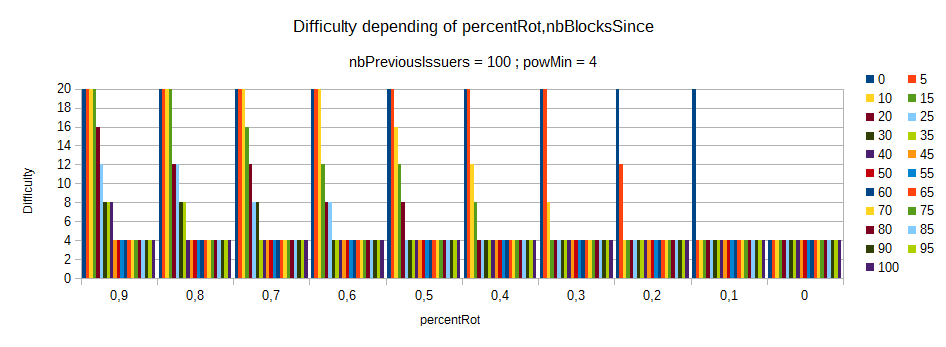

DIFF = MAX[PoWMin;PoWMin*FLOOR(percentRot*(1+nbPreviousIssuers)/(1+nbBlocksSince))]
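As a minimal sketch, the formula above can be evaluated directly in Python. The function name and the sample values (PoWMin = 73, 13 previous issuers) are assumptions picked for illustration, not values taken from the protocol.

```python
import math


def personal_difficulty(pow_min, percent_rot, nb_previous_issuers, nb_blocks_since):
    """DIFF = MAX[PoWMin; PoWMin * FLOOR(percentRot * (1 + nbPreviousIssuers) / (1 + nbBlocksSince))]"""
    factor = math.floor(
        percent_rot * (1 + nb_previous_issuers) / (1 + nb_blocks_since)
    )
    # The issuer never gets less than the common difficulty PoWMin.
    return max(pow_min, pow_min * factor)


# Hypothetical inputs: PoWMin = 73, percentRot = 0.66, 13 previous issuers.
for blocks_since in range(6):
    diff = personal_difficulty(73, 0.66, 13, blocks_since)
    print(f"nbBlocksSince={blocks_since}: DIFF={diff}")
```

With these inputs the difficulty starts at several times PoWMin right after the issuer writes a block, then steps down to PoWMin as nbBlocksSince grows.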

If I plot this on a graph using percentRot.ods (21.2 KB), I get:

Each series corresponds to one value of nbBlocksSince, so the very first column for each percentRot value is nbBlocksSince = 0.

We get a flat difficulty: the same for everyone.

Well, yes, it is a problem. Look at this graph: if we choose percentRot = 0.1, the supernode with high CPU power drops back to the common difficulty very quickly. It will then very likely compute the next block soon after, before many others have computed a single block in days, because it comes back again and again too quickly.

Choosing a high value of percentRot, on the other hand, makes it stay longer on the “high difficulty” side.
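To put rough numbers on this comparison, here is a small sketch that counts how many blocks an issuer has to wait before falling back to the common difficulty, for a few percentRot values. The helper name and the figure of 13 previous issuers are assumptions for illustration only.

```python
import math


def blocks_until_common(percent_rot, nb_previous_issuers):
    """Smallest nbBlocksSince at which the formula's multiplier is back to <= 1,
    i.e. the issuer faces only the common difficulty PoWMin again."""
    nb_blocks_since = 0
    while math.floor(
        percent_rot * (1 + nb_previous_issuers) / (1 + nb_blocks_since)
    ) > 1:
        nb_blocks_since += 1
    return nb_blocks_since


# Hypothetical network with 13 previous issuers.
for percent_rot in (0.1, 0.66, 1.0):
    wait = blocks_until_common(percent_rot, 13)
    print(f"percentRot={percent_rot}: back to common difficulty after {wait} block(s)")
# percentRot=0.1 gives 0, percentRot=0.66 gives 4, percentRot=1.0 gives 7
```

Under these assumptions a low percentRot gives no exclusion period at all, while a value close to 1 keeps a recent issuer out for about half of the issuer count.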

The problem for small-CPU issuers is how far below the network’s average CPU power they are. If we mostly have high CPU power, then small CPU devices will not compute many blocks, whatever our rule is, IMHO.

Ok, so we must choose a high percentRot parameter, close to 100%.

[quote="cgeek, post:9, topic:924"]

The problem for small-CPU issuers is how far below the network’s average CPU power they are. If we mostly have high CPU power, then small CPU devices will not compute many blocks, whatever our rule is, IMHO.

[/quote]

Yep, that’s right.

Small CPUs will always face at least the common difficulty, which is itself determined by the CPU power of the pool of issuers.

So there is a kind of CPU power war, though a milder one than in mined currencies.

If many issuers use ASICs or any other powerful hardware (currently used for mining Bitcoin), blocks will be generated too fast. The common difficulty will then grow to reduce the blockchain writing speed, and small (ARM) and medium (x86, 2 GHz) CPUs could be excluded.

I think ASICs cannot be used here, because they were not made for cryptographic signatures but for hashing, which is a slightly different thing.

We will have to watch out for new kinds of powerful CPUs that could start a power war in Duniter currencies.

Anyway, we have to launch our first libre currency… we will see that later.

Awesome:

    curl address:port/blockchain/difficulties

    {
      "block": 11072,
      "levels": [
        { "uid": "vincentux", "level": 292 },
        { "uid": "cgeek", "level": 146 },
        { "uid": "kimamila", "level": 73 },
        { "uid": "1000i100", "level": 73 },
        { "uid": "mmu_man", "level": 73 },
        { "uid": "matiou", "level": 73 },
        { "uid": "moul", "level": 73 },
        { "uid": "fluidlog", "level": 73 },
        { "uid": "greyzlii", "level": 73 },
        { "uid": "poka", "level": 584 },
        { "uid": "Galuel", "level": 73 },
        { "uid": "cuckooland", "level": 73 },
        { "uid": "mactov", "level": 146 }
      ]
    }
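A response like this one can be summarized with a few lines of Python. The sketch below embeds the levels shown above and expresses each issuer's level as a multiple of the lowest one. Treating the lowest level as the common difficulty is an assumption, and the variable names are only illustrative.

```python
import json

# The response shown above, pasted as a string.
response = """
{"block": 11072, "levels": [
  {"uid": "vincentux", "level": 292}, {"uid": "cgeek", "level": 146},
  {"uid": "kimamila", "level": 73}, {"uid": "1000i100", "level": 73},
  {"uid": "mmu_man", "level": 73}, {"uid": "matiou", "level": 73},
  {"uid": "moul", "level": 73}, {"uid": "fluidlog", "level": 73},
  {"uid": "greyzlii", "level": 73}, {"uid": "poka", "level": 584},
  {"uid": "Galuel", "level": 73}, {"uid": "cuckooland", "level": 73},
  {"uid": "mactov", "level": 146}
]}
"""

levels = json.loads(response)["levels"]

# Assumption: the lowest level in the list is the common difficulty.
base = min(entry["level"] for entry in levels)

# (uid, level, multiple of the base), hardest first.
summary = sorted(
    ((e["uid"], e["level"], e["level"] // base) for e in levels),
    key=lambda row: row[1],
    reverse=True,
)

for uid, level, multiple in summary:
    print(f"{uid:<12} level={level:<4} x{multiple}")
```

Here every level is an exact multiple of 73 (x1, x2, x4 or x8), and most issuers sit at the lowest level.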